Reposting this in a different format. Basically considers not fully implemented ideas concerning edge contour curve construction, and delves into the matter of how one might terrace model a terrain mesh object.

I have considered the following solution as a thought out non implemented exercise to a problem which not only involves terrain edge detection but edge detection at a given height, hence defining a surface edge contour curve.

The problem defined here is as the title states a terracing problem. One can visualize this with real world examples found throughout the world, in particular in the use of land space as in the case of agriculture, say rice plantations on a given mountain side, or sweet potato plantations. Here we descriptively define the use of such space as increasing a given elevation surface area of land space by cutting into a given hill/mountain side until having reached a terrace’s defined spatial surface area allotment. Then commencing the process of terracing the procedure is repeated on subsequent higher elevation layers until having reached a maximum height of such hill/mountain side.

Some alternate descriptive ways of characterizing a terrace as follows:

- A terrace can be also defined as a land surface contour where the contour of the terrace represents some closed loop of a given surface region.

- The surface area of contour loop may be thought of as in the layered cake analogy of a particular region that is extruded to some height level, and that a neighboring terrace is a contour loop that is either nested and extruded or contains the nested extrusion of any subsequent contour regions.

Defining the Contours of the Map

Consider the mathematical descriptions and possible requirements involved in defining a contour of a terrace:

- Cataloging all known height positions.

- Checking for contiguity between positions...this means checking to see if the contour is closed loop passing through any similar height map position, or having defined a contour loop in its own right.

- Linearly interpolating between points (an edge), to determine where a non identified vertex need be created potentially in further identifying a contour.

Once having defined the contours of the map, one can imagine a top down topographical approach here. That is, a two dimensional map has defined curvature that shouldn’t be like a hiker’s map in so far as defining elevation curvature of a given landscape. A similar construction could be applicable in the aid of the terracing problem likewise.

For a given contour, for points that are not defined on the grid (conforming to, for instance, a defined grid subdivision), one could define this more clearly to the minimum spatial resolution (if desiring equal spacing between all grid points), or having used an approximation such as choosing a nearest neighbor assignment in further defining the contour.

If we restricted our terracing problem in way that we are restricting the maximum number of potential terraces, then the problem is potentially likely a destructive or constructive process, depending on the number of potential identifiable conto urs present.

For the destructive or constructive case, likely we’d want to, according to scale, consider the maximum number of contours distributed in some fashion across the scale of such terrain. That is, we’d work upon the principle of best matching a terrain terrace height elevation to identified height map regions on the grid. In this case defining matching and neighbor proximate vertices within these terrace elevation regions.

Thus likely the problem could be defined in a number of variational ways here.

Nearest neighbor vertex solution

Let’s say we have the following

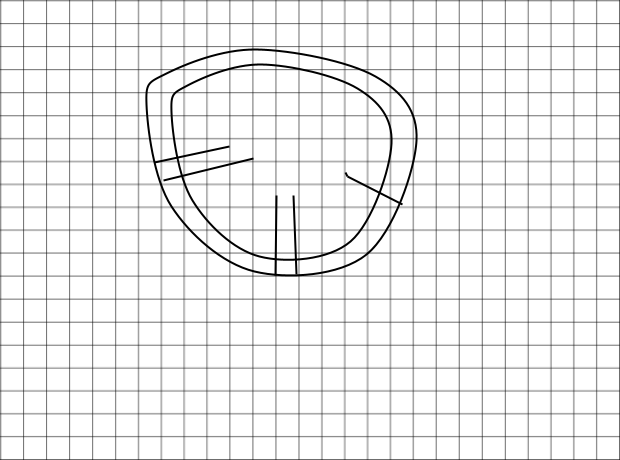

Obviously in the figure we can see a two dimensional representation of a top down contour shows how many potential nodes are missed for a given grid of a provided resolution.

One solution to this problem is to choose nearest neighbor points representing the contour.

Here we can see this is an approximation to the contour line. By choosing the nearest neighboring point we can ensure that the contour is more closely represented to a fixed dimensional grid size...where vertex position points, for instance, are spaced metrically in equal ways from one point position to the next. The trade off on this with lower resolution is that there may be more significant loss in graphic data representing the curve.

Since mesh objects can be represented, however, in various ways, we need not alway represent say a contour line by way of equally spaced points either in representing such mesh object. Meaning we can also choose to structure an object with vertices as we choose. In this way we can also re represent a curve conforming to a new set of points

Changing the resolution of the grid

While the nearest grid point choice method, allows, still allows a contour to be better represented in terms of data on a given fixed resolution grid. One might also consider changing the resolution of the grid. This amounts to increase the amount of potential vertex data for a given terrain mesh object. Thus coupling this with a nearest neighbor vertex method, one might reduce loss/change in geometric data, but also add potentially increasing the size of such data by increasing the resolution of the object (that is, by increasing the number of vertices representing a given terrain objects form).

Re representing the curve

In the next sequence we merely re define the set of mesh points using an algorithm which structures the set of mesh points according to a defined curvature. We can actually define such curvature, for instance, using a cubic spline approximation along the loop, and thus defining equal point spacing on a given contour by using something like arc length formulation piecewise for each spline equation. While this defines probably a better approximation for a given mesh contour along the terrace, however, this does present problems for defining the mesh object further in so far as say faces and/or determining the application of texture objects. Secondly we for a given mesh object whose vertex spatial arrangement is given according to a uniquely defined contour surface based coordinate system, we still contend with the issue of determining inside such curve the coordinates of points. We could use something like surface based normals alongside a scaling procedure which determines the next set of interior points, repeating this procedurally as necessary until having reached an adequate contour welding surface to any neighboring contour. Admittedly this is also a much more complex mathematical prospect, although one should imagine if having been completed successful could provide for arguably the least geometric loss in data.

Procedurally not only would one need contend with drawing the contours at any given heightmap level, but again determining based upon the set of edge normals provided from each edge welding faces from one contour band to the next. Higher resolution data, not doubt likely reduces potentially the difficulties encountered in such problem, although something like a normal lines from neighboring points intercept problem could be key in solving this sort of problem…

The diagram to the left illustrates for instance, roughly drawn normals relative to contour lines. Ideally the same number of points are drawn even as the scaling of the contour line diminishes, which is to say that as the contours become smaller and smaller in terms of arc length the number of vertices remain the same. Thus the faces diminish in surface area size as the contour arc length becomes smaller.

There are some problems, however in the distribution of points per contour surface area. Consider the sawtooth ridge problem. Here if a given ridge line were drawn as one contiguous closed loop boundary, we’d likely have the same problem as a continuously drawn set of contours, that ideally under rescaling reached an apex where total points distribution were one and the same. However, in the non contiguous sawtooth ridge problem, we instead subdivide the ridge line into several closed loops and at some point must distribute between these closed loops a total number of points in keeping to the original contiguous closed loop problem. That is the spatial distribution of points might like similar except subdivided between closed loops at a given apex. Obviously we’d need to account for repetition of points along such boundary curvature like wise as illustrated below…

Here the dotted line in the figure might represent for a given spatial area a contiguous ridge line (which aids in the formation of a solution) since this dotted line approximates what a total distribution of points would look like, we could then approximate a distribution of points for each peak. In this case a distribution, for instance, might be given according to a matching of spatial area as related to the contiguous case.

This particular problem also may be best approached in a different way entirely. Which is that one might instead define a contour say from a base level, and then attempt to path construct an edge normal which should intercept adequately a neighboring contour normal. This ostensibly turns into something of a path finding problem with optimizations since one need to join two normal edges ideally so that as close to a linear approximation is yielded in the process of finding an normal edge relative to either defined neighboring contour curve.

If between two contours a non adequate path is found, perhaps, an intermediate contour might be constructed which parses differences between both such curves so as to situate a path as close to being a linear normal edge as possible?! In any event, one might also resort to constructing normal curves within tolerances ranges for path construction. Once an adequate normals path resolving method is found. For any arbitrarily chosen point on the contour curve, and given arc length point wise distribution (for a given curve base), one can commence building the set of normals path wise along the perimeter of a base contour, until having traversed the set of all potential points on a given circumference. Reminding in theory we should be able to do all this as long as we have piecewise built the set of all contour curves with their corresponding families of cubic spline interpolated functions...we’d start by computing normals of such function say between cubic spline point intervals on the contour. This leads into another potential algorithm described below.

Path wise intermediate contour construction algorithm

The example works as follows, between two neighboring contours pathwise normals are constructed and we optimize on a given inner curve the a point normal which closely approximates as needed a linear pathwise normal curve between both such curves, but that such point still neither yields a pathwise normal say on the inner contour within a prescribed threshold range. In this case, we can draw from either point normal linear curves and then compute an intercept point. From this intercept point we can draw another curve that more smoothly transitions the pathwise normal curve, defining an intermediate contour. We could fill in the details of the intermediate contour, however, a bit differently than as in the previous set of algorithms which constructed a contour set. Instead the path wise normal determines the two dimensional coordinate positions with a defined function for such normal (in this case we are likely resorting to another interpolated function of second or third order criteria). Secondly along such path with regularly defined contours of a given metric definition (whether this were spacing defined in regular intervals of a few meters elevation), we could resort to some regularity provided by average on such pathway. If such spatial length were distributed at say 40 meters elevation, then the path’s mid elevation range on average would be 20 meters upon normals intercept...or however distant in scaling relative to a midpoint or the end points in determining the elevation for an intermediate contour. One particular topographical rule is that at least contour elevation bands themselves may be set by some regularized interval (whether this were 5, 10, 15, and so forth meters). The spatial distance from normal point to normal point on the other hand between neighboring contour curves, on the other hand, may vary so that larger scale elevation change has taken place with relatively small path wise normal distance gained. A path wise normal curve, also, optimally represents on the family of contours, the shortest path for maximum elevation change, or in field topology, for instance, may represent the direction of say ‘force’ on a given field space….consider the example of gravitation space time topology...the normals on such field contour would represent the direction of force at at any given point on such space time continuum. The advantages also in terms of field topology having terrain mesh topology constructed in such a way, is that these may compliment field simulations. Consider for instance, the direction of hydraulic terrain deformation characteristics. More easily if these normal contour paths are determined in the readied sense, computational expense may be less so if considering say the problem of probabilistic path of water transport and sediment deposition. Of course, this does lead to an added problem of reconfiguring curve contours on a post deformation cycle.

Constructing the contours

This problem can be considered in a number of ways. If it is a topographical map, that one had in hand, one would simply need scan an image while simultaneously threshold reading relevant versus non relevant data. In this case, we’d likely have depending on the resolution of the image a bit mapped representation of the image, which means that our contours would need be interpolated pointwise in defining topographical data.

Another problem is reading the data say from a mesh object with a more commonly given equi distant metric provisioned on a terrain mesh object, this tends to be common for non organic mesh representations while organic structures on the other hand might have topology defined more closely in the way of topographical contouring. In the event of contouring an object say using Cartesian based (affine) coordinate systems, you’d need to have linear interpolation methods set in determining where for instance, points on the mesh objects correspond to a given contour, or constructing contours using contiguous closed loop determining routines from limited mesh object spatial data sets. What one should have for a given contour is not a set of points (although we could define a contour in this manner) but a family of functions say for point wise intervals (one could set this interval at something an equidistant range of 1 integer unit, although this equidistant range need not be anything as large as one integer unit)...I don’t recommend integer unit intervals ranging significantly large since cubic functions become unstable over larger numeric intervals (these will lead to pronouncements in say path oscillations which renders stability in curvature useless). This leads to a subset of the problem at hand…

Determining a contour cubic spline function for a given contour interval

First in this problem for the described interval, it would be presumed one have adequately mapped a set of points. In a rough way, one should have an algorithm which sketches out a contour (in a preprocessing manner) one might imagine. Part of this pre processing appending/mapping of data, may look for things like nearest neighbor points for a given curve contour...this is not simply an algorithm which matches all points to a given terrain elevation, but also need sense points that may be contiguously drawn into a contour in its own right. Consider the twin peaks problem where two peaks may share up to a point similar contour bands but are non contiguously drawn between banding regions. Thus one should have a property which determines that a contour may be drawn from such point to a neighboring point of the same elevation...thus some search criteria means that not only a point shares the same elevation, but that edges along such path intercept the contour band. It may help in principle also using a process of elimination when having constructed a sequence of contour points. Discarding points in the algorithm that have already been appended to a contour region. Ideally what one should have in the preprocessing stages are not only some definition of the contour region points, but that the spacing of points are ideally proper for spline interpolating...ideally these could be in whole integer units (and one may need some procedural method for normalizing the set of data so as to make this condition mathematically proper and ideal for interpolating). Linear interpolation will obviously come in handy since edge making algorithms not only tell us without computing the neighbor points on such edge that an a contiguous contour may be defined, but that linear interpolating processes will tell us exactly where such edge intercepts on the contour’s two dimensional coordinate plane. In this respect, it would be expected that the process of determining contiguity also has furnished a necessary spatial arrangement of data (given pre processing normalization). Once such data is readied then it is merely a matter of processing such data from the cubic spline interpolated process on the the two dimensional contour plane. In this way, the simplest algorithm it suffices would likely have some computed slope data provided (say left approach one boundary point and left approach simultaneously on another boundary point), obviously the boundary points themselves should have been computed in the preprocessing stage. Then we’d merely append the cubic function coefficients and procedurally repeat this process working circumferential around the contour curve until having amassed for such curve the family of functions describing the curve with corresponding map provisioned between point intervals, both x and y...keep in mind we’d have two matching local x coordinate position for two separate functions so likely a two coordinate key tuple matches best the coordinate to function. Procedurally we repeat this sequence for the set of all contours on a banding region, and then repeat this process yet again for all such delineated contours.

Some added things for consideration especially in pre processing data include:

key indexing data for fast retrieval...consider the neighboring point problem where a known neighboring interval is being sought after for a given point. In this case, something like the moore neighbor search problem, or von neumann problem I’d imagine suffices. Thus pre processing neighbor point key data for a given point could readily speed up a given search for neighboring points on the search algorithm problem. Again one isn’t merely searching to see if a neighbor point is at such a height, but that it has an edge crossing such contour boundary….even if a neighbor point is at another heightmap value greater or lower than a respective contour position this doesn’t meet sufficiency for the contour criteria, a neighboring point could be proximate at the same contour height but doesn’t have an edge that crosses the contour plane, and is technically interior to the contour curve (that is, define and defining any other contour curves but not the one that we are looking for)...such a point must have an edge that crosses the plane of the contour, or be positioned exactly on the contour itself. Imagine the cross section of an apple, the edge defining the contour at every such horizontal cross section of the apple (xy plane). In this way, if a point on the apple also intersecting the plane of such contour isn't defined, an edge between vertices on the apple should be crossing and cutting into the contour plane for such given cross section.

Having this one can then proceed to the next step…

Computing the normal of a contour curve

The family of function provides this answer to us in directly via calculus, for instance, and a bit of trigonometry. We can compute the tangent of the curve (first derivative), and then compute from this the normal using trigonometry on the tangent line (90 degrees relative the tangent). Other methods for computing normals from a known equation may work likewise. It is important keeping reference to direction, there are actually an infinite number of normals (rotated around the plane of a tangent curve). We are actually looking for a normal curve that is on the contour plane, and is likely specified with direction since path wise we’d likely be moving in one direction along such normal to the set of interior contours.

Optimizing the path normal between two contour curves

If such a problem were given to the simplicity of a rescaled contour curve, likely there would be little work needed in finding point intercept on a neighboring nested contour curve, since this position should using something as simple as point slope formulation...that is a linearly projected along the path normal intercepting the interior contour curve represents the normal also to such interior curve.

In principle this approach could be applied likewise, and then a given test performed to see how the direction of the normal (on the point slope intercept) compares to the point interval’s normal (from the contour’s function on such interval). In this case if there are significant deviations between the point intercept normal and the interior contour curve normal, then one may need consider constructing a set of pathwise contours which transform the path normals to reconcile better to desired tolerances. The idea here is subdividing say the ‘error’ between two curves neither matched at a given point for curvature. Methods for smoothing a curve’s ‘error’ can be done also by again interpolating a normal pathwise curvature. Where boundary condition endpoints are determined and normal’s provided by either boundary points are again provided which in turn provide first derivative conditions of the path wise normal...in this way we have all the ingredients lined up for another cubic spline interpolated normal curvature along the path normal. Once having this we can articulate the curve by determining intermediate contour points which describe more smoothly transition from one one contour region into the next. Technically if this subdivision were infinitum, there would be no discernible ‘error’ given between contour transitions, and the normal path would be perfectly described as perfectly normalized at the intercept of each contour curve.

There are some caveats to a path tracing algorithm in finding a best fit from one contour curve to the next. Optimization should include that the path is the least linear distance in summation between both points from their respective point normal curves. This is not by the way the least distance as given by euclidean distance in a cartesian coordinate system between two contour curves. The optimal path is determined rather by the path of normals which can be thought of as an extension of a local surface coordinate system. It is implicitly assumed that by extension of the most optimal linear path, an optimized cubic equation will likewise result in terms of arc distance. The optimization routine for choosing such path could use something like an iterated newton’s method...basically from a test point value if path distance appears to be growing larger, then on an iteration cycle an upper bound is constructed on the previous iteration, while refining a midpoint iteration between the upper bound its parent lower bound. In this way we hone in closer and closer to an optimal point, in a given testing routine. One can likely set an upper limit in ‘error’ testing between the any previous iteration successor and a likely most optimal point candidate, whether this is a very small decimal point running, for instance, in the range of .000000001 or something like this, generally this all being contingent on desired computational expense. Likely I visualize this problem as optimizing in the linear case, and then constructing the cubic equation describing such path, otherwise, added computational expense occurs both in computation of the cubic equation and its given arc length measurement. Obviously the linear case, is quicker and easier.

From a given base subdivision of a contour arc we can apply rule for as many points on any closed contour loop. The first ancestral successor likely is given to an algorithm of our choosing in so far as the allocation of points subdividing the contour. Any given generational contour successor will already uniquely through the path routine describe above have its assignment of points on the contour curve, so one only need construct, in theory, one particular arc length subdivision on the curve. All other contour curve subdivisions will follow suit from this ancestor.

Additional Rules for Contour Loops

We can additionally consider the construction of some following rules for contour construction:

- A contour that has no child successor will be given to a midpoint subdivision...this is ideally a mid point arc that that traverses the length of the contour curve.

- Any series of contour curves in a banding region given to an absence of child successor contours will have a midpoint arc that flows between such contours forming an inter connecting bridge between such loops, or in other words, where a parent contour has more than one child successor means that midpoint arc bridge should be constructed between such child closed loops. Consider, for instance, the saw tooth example given above. We’d construct between the peaks a sub ridge maximum arc curve subdividing this parent contour curve of the saw tooth peak contours.

Really at the moment I can only visualize the necessary special construction rules in these two special cases.

The construction of the midpoint arc curve may be given by the following process for rule 1:

- We’d follow a similar procedure to the normal path optimization routine, except the mid point will be constructed on special case premise. An optimal path will be constructed from a pair of known points since both points will have been determined.

- Once choosing an optimal point (opposite side of the contour curve), a cubic curve can be subdivided at approximately equal to ½ its total arc length…

- Once having appended all such points on the closed loop we can construct the final midpoint ridge of the closed curve.

The construction of midpoint arc curve for rule 2 on other hand appears to be a composite of standard rules and the rules given:

- Where no child optimal point successor is found and that it appears that an optimal point on the parent is instead found determines the use of such parent point (opposite side of the contour curve).

This provides more or less a description of the processes involved in contouring a terrain. Likely given that terrain is appended in terms of surface topology that is euclidean in nature...one may need weld any of this topology to an existing framework in providing compatibility, or otherwise re translating...some game engines like ‘unity’ will not accept anything but a standard euclidean based surface topology (where the spatial surface metric between points is the same). On the other hand a number of three dimensional modeling programs may easily accept any of these types of surface topology. In ‘Unity’ you’d likely import these terrain structures as mesh object.

Building the terraces

Once having a set of contours plotted two dimensional in any event allows one either by extrusion, or by some method of translating points on the contours. Most of the work is completed at this point, and all processing of data up to this point provides relation to contour data. In this regard, contour data contain level banding data, meaning that terrain data is given at a constant height between contour regions, and that the contours themselves represent the change in elevation relevant to any successor region that is banded at higher or lower elevation. If creating a family of functions mapping representation of the a given surface topology, as has been the focus of much of this writing up to now, ultimately there would be a transformation of functional data into object data points, like vertices and their face correspondence. Obviously, this leaves another algorithm which constructs both the set of vertices and their respective point positions in some global euclidean coordinate system, but also a given inter relation between the vertex indices and, for instance, their face and edge data structures.

Probably the simplest of organizational structure of vertex container data, follows an ordering regimen that is not unlike reading the data say from the page of a book. Whether an object topology is read from top to bottom, and running left to right, followed by the next line feed after one line is completed. The extension of this organizational routine would be applied in so far as face data organization. This does leave however, some potential complications when surface topology is organized a bit differently. Thus it is probably important when reading surface vertex data, that the order reading scheme of vertex data might otherwise be the same if the surface topology were cut and rolled out as a flat piece of paper. Another method that I could think of, in the case of contouring organizes vertex data by way of principle of the contours themselves. Where one say chooses consistently say a twelve o’clock position and then reads clockwise or counter clockwise around the contour, a map is also constructed relating this contour to its child(-ren) successor(s), and then presumably at the same heightmap another contour is read given to some geographic ordering principle, whether this is in the fashion of reading a book from top down, left to right, until all contours at such heightmap level are read. Once completing this cycle a next elevation heightmap band is read repeating the same algorithm. All of this is repeated into all heightmap representations are completed and no further contour data remains. Obviously preprocessing relational data can speed up search and mapping aids between vertex data. For instance, when normal paths are constructed between neighboring contours, neighboring point relations can be pre appended to relational maps that save us having to construct this data on a next go around when appending vertex relational data for constructing faces of the terrain object. Thus in the previous steps above prior to the terrain object construction we actually should have mapped vertex data, and thus likely we should have vertex key data mapped even before we commence in defining the positions of vertices or their faces technically. In this respect then it may also be important for preprocessing face data in so far as vertex ordering before the positions of the vertices have been assigned, and if we have done this, likely it may be a small step in mapping coordinate data as this could also be easily computed in the processing stages above. With these maps having been constructed implementation may already be done for us if we consider the ordering of how we are processing data...its not so hard to add a few steps that save us a lot of added work later if we consider well the process of computations here.

Thus I recommend actually if in defining contours, using the routine of building a family of functions both of contours and normal curves on such contours, also simultaneously indexing vertex coordinate data, as well as mapping face data by vertex indices...customarily mapping face data amounts to building the face using the vertex’s index position followed by any neighboring vertices forming the face in a clockwise or counter clockwise manner. Thus a square face could be represented as [0,1,2,3] where each integer position represents the stored vertex’s index position in the vertex coordinate data container that might look like [[0,0,0],[0,1,0],[1,0,0],[0,0,1]] is probably the most common representation of a face. A face can have more and less than four vertices representing a face (called triangles and faces likely having poles...when they have less or more than 4 edges).

Summation: Likely the nearest neighbor vertex solution coupled with resolution dynamics to a given surface topology, could make for easier implementation for building curve contours. Albeit you’d still need to determine the contours using a similar method as defined above as in constructing contours that involved building a family of spline functions, that is in defining where the edge boundaries of the contour curves exist, the process should be much the same. There appears to be some added labor involved in functional curve building (spline building case), but on the other hand allows us to specifically tailor our terrain topology as needed by vertices that we choose to represent in such geometry, such method not only optimizes potentially a better representation of terrain terraced surface topology but allows us to represent by whatever desired resolution in so far as our application is desired. (where surface spatial metrics between points are the same). The more crude method allows us to change the resolution of the grid to more closely approximate the contour data, but may do this with some added computational and rendering expense. I’d leave it up to the reader to see if there were any appreciable difference in so far as computational expense for either method. Lastly, as has been pointed out with respect to the matter of organic topology if surface topology could be given the same respect in terms of structure, one could appreciate the sorts of flowing structure better represented in terms of surface terrain allowing for some furthered ease in changing terrain structures. Often times I imagine this is an easier point to neglect since one could seldom think less of terrain animations...for instance, hills need not eyebrows that need be easily moved up and down, or have some skeletal muscle structure in a similar way that might simulate animated motions, for instance, a smile, a frown, or anything of this sort that might more amply necessitate or provide furthered use for better surface topology, on the other hand, maybe some practical use could be had of the type of well contoured surface topology that might be had relative to other approximating cases? In any event, this could at least be eye candy for 3d terrain modelers.

Brush off the math books again...all of this entails use of linear algebra, calculus, and so forth. Some key things to be implemented: Computation of the cubic spline equation. Computing derivatives, computing arc length, and trigonometry, or if you were interested in the mathematics of geography, this is an excellent applied mathematics project.